
Research


Motion analysis and scene understanding

Motion detection

OUTLINE

Understanding what happens in a scene is a complex process, even for human beings, that involves several sensory organs and brain activities. The first stage of scene understanding is detecting motion, that is, detecting "changes" in the scene. As a matter of fact, "changes" are due to the following different causes:

- (a) robustness to false changes or uninteresting motion
- (b) computational efficiency, that is, the capability to process a high number of frames per second

In the literature, a wide range of solutions to the change detection problem has been proposed, each representing a different trade-off between (a) and (b). However, the most effective approaches compare each frame with a reference background. This requires that a statistical model of the static background scene be built, even while objects are moving, and kept up to date. Fig. 1 shows a current frame on the left, in the middle the background being generated while vehicles are passing, and on the right the generated reference background (here, after about 10 s). Changes are then detected by subtracting the current frame from the background. The changing pixels are those whose value in the difference image is above a given threshold (Fig. 2, left).
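The thresholded background difference described above can be illustrated with a minimal sketch. The per-pixel temporal median used here to build the background is only a common stand-in, not the background generation algorithm referred to in this page, and the function names and the threshold value are assumptions chosen for illustration.

```python
import numpy as np

def estimate_background(frames):
    """Per-pixel temporal median of a list of frames (stand-in model, an assumption)."""
    return np.median(np.stack(frames), axis=0)

def change_mask(frame, background, threshold=25):
    """Boolean mask of pixels whose absolute difference exceeds the threshold."""
    diff = np.abs(frame.astype(np.float64) - background.astype(np.float64))
    return diff > threshold

# Toy sequence: a static scene of gray level 100 with a bright object
# that occupies a different column in each frame.
frames = []
for col in range(5):
    f = np.full((4, 5), 100, dtype=np.uint8)
    f[:, col] = 200
    frames.append(f)

background = estimate_background(frames)   # the moving object is filtered out
mask = change_mask(frames[2], background)  # only column 2 is flagged as changed
```

Because the object covers each pixel in only one frame out of five, the median recovers the static scene even though no frame is free of moving objects.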

Fig. 1: The generation of a static background scene. Left: current frame. Middle: ongoing background generation, while objects move. Right: the reference background.

Object segmentation aims at identifying each object's contour, and therefore the pixels it is made of, and it is the earliest step of any subsequent analysis of the objects' motion. In fact, the more precise the segmentation, the more accurate the visual properties of the object extracted automatically frame by frame. To this end, we have also developed an effective shadow detection algorithm that removes attached shadows, so that only the real object is finally segmented.

Fig. 2: The segmentation of the moving objects. Left: the changing pixels, after thresholding. Middle: the moving objects, after denoising and morphological operations. Right: blob labelling, the last segmentation step.

Denoising and morphological operations (Fig. 2, middle) aggregate pixels into blobs (a blob is one object or, often, an aggregate of several objects that occlude one another). After that, separate aggregates of connected pixels are labelled (Fig. 2, right, using pseudo-colors just for visual convenience), so that they can subsequently be used as masks to identify the real objects in the current frame (Fig. 1, left).

Of course, the most challenging scenario for detecting motion is the outdoor environment. In fact, unlike indoors, changes in the scene may be unrelated to human activities and hence more difficult to manage. For instance, while indoors the illumination can be kept unchanged thanks to artificial lights, outdoors the scene illumination changes with the time of day and the weather conditions. Besides, unwanted motion may originate from natural phenomena, such as rain or snow, or from branches moved by the wind.

METHOD

We have conceived a novel methodological approach that explicitly addresses all the above issues (1. - 6.). In particular, our change detection is based on a coarse-to-fine strategy implementing a two-stage background subtraction [S0].

In the preliminary stage we use the algorithm we conceived to build, quickly and reliably, a background scene even in the presence of moving objects [S6] (see Fig. 1).

At the first stage, the background is subdivided into blocks (Fig. 3) and the median is computed for each block, in practice yielding a background at a lower resolution. This gives a background that is highly robust to illumination changes. It also brings a benefit in terms of speed, since a reduced number of pixels is processed.
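As an illustration of the block-based background, the sketch below downsizes an image by taking the median of each square block. The function name `block_median` and the choice to crop rows and columns that do not fill a whole block are assumptions, not details taken from the original algorithm.

```python
import numpy as np

def block_median(image, block=4):
    """Downsize a 2D image by taking the median of each block x block tile.

    Rows and columns that do not fill a whole tile are cropped (an assumption).
    """
    h, w = image.shape
    h, w = h - h % block, w - w % block
    tiles = image[:h, :w].reshape(h // block, block, w // block, block)
    # Bring the two in-tile axes together, then flatten each tile.
    tiles = tiles.transpose(0, 2, 1, 3).reshape(h // block, w // block, block * block)
    return np.median(tiles, axis=2)

# A uniform background with one outlier pixel: the block median ignores it.
background = np.full((8, 8), 50, dtype=np.uint8)
background[0, 0] = 255
coarse = block_median(background, block=4)  # 2 x 2 image, 16 times fewer pixels
```

The example shows the two properties mentioned above: an isolated deviating pixel does not affect the block value, and the number of pixels to process drops by the square of the block side.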

Fig. 3: Block-based backgrounds. From left to right: backgrounds with square blocks of 4 and 8 pixels per side and the respective ×4 and ×8 downsized backgrounds.

Fig. 4 shows the input and the outcome of the different stages of our algorithm, using a challenging indoor sequence with very low contrast as a case study. The first row shows the block-based background (left) and the original frame at full (middle) and coarse (right) resolution. First, an efficient block-based (coarse) background difference is performed on the low-resolution images (Fig. 4, second row), yielding reliable and tight coarse-grain super-masks (right) that filter out most of the possible false changes.
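A rough sketch of how a coarse super-mask can gate a pixel-level difference is given below. The thresholds, the function name and the requirement that the image size be a multiple of the block side are assumptions; the filling, denoising and labelling steps of the actual algorithm are omitted.

```python
import numpy as np

def coarse_to_fine_mask(frame, background, block=4, t_coarse=20, t_fine=15):
    """Return (coarse block mask, fine pixel mask restricted to the super-mask).

    Assumes the image height and width are multiples of `block`.
    """
    h, w = frame.shape

    def downsize(img):
        tiles = img.reshape(h // block, block, w // block, block)
        tiles = tiles.transpose(0, 2, 1, 3).reshape(h // block, w // block, -1)
        return np.median(tiles, axis=2)

    # Stage 1: block-based difference at low resolution.
    coarse = np.abs(downsize(frame) - downsize(background)) > t_coarse
    # Expand each block decision back to full resolution (the super-mask).
    super_mask = np.repeat(np.repeat(coarse, block, axis=0), block, axis=1)
    # Stage 2: pixel-level difference, kept only inside the super-mask.
    fine = np.abs(frame.astype(np.int32) - background.astype(np.int32)) > t_fine
    return coarse, fine & super_mask

background = np.full((8, 8), 100, dtype=np.uint8)
frame = background.copy()
frame[0:4, 0:4] = 160   # an object covering one whole block
frame[6, 6] = 160       # an isolated false change
coarse, fine = coarse_to_fine_mask(frame, background)
```

In this toy case the isolated pixel would pass a plain pixel-level threshold, but it is discarded because its block is not flagged at the coarse level.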

Fig. 4: First row: the block-based background (left); the sample frame at full resolution (middle) and block-based (right). Second row: coarse-level detection: binary change mask after thresholding (left) and filling (middle), and the final block-based change mask (right). Third row: fine-level detection: output of the background subtraction (left), after denoising, filling and labelling (middle), and the final segmented gray-level object (right).

Within these reliable coarse-grain masks, the second stage can perform an accurate fine-level detection in real time (Fig. 4, third row), because of the reduced area on which it works. However, this is just one of the advantages of our approach. In fact, the complement of these masks can be used to infer reliable and up-to-date information regarding changes due to cases 1., 2. or 3. In particular, this allows us to apply our tonal registration algorithm [M1][M2] to the current fine-level background, so that the current frame and the background share the same photometric range. In this way, the thresholded background subtraction is already of good quality (Fig. 4, third row, left) and it is quite easy to achieve a perfectly segmented object (right) after denoising, filling and labelling [S2] operations (middle).

Finally, the complement of the reliable coarse masks is also used to carry out a robust selective background updating procedure.

RESULTS

Tests were performed on several sequences, taken by different cameras and sampled at different resolutions. What is shown here aims at emphasizing the quality of the detection obtained by using a block-based background that is robust to illumination changes due to time of day or environmental causes. The improvement in terms of computing performance follows directly from the reduced number of pixels processed.

The results presented in Fig. 5 concern three challenging sequences. The first row refers to an indoor sequence where the gray levels of the moving person's (light) shirt and left hand in the current frame (left) blend into the background wall and desk (middle), respectively. Nevertheless, the person is segmented with his borders followed perfectly (right). The second row of Fig. 5 concerns an outdoor sequence where, besides camouflage problems (middle), the moving hedges and waving trees of the background (left) usually produce many changed regions in the background difference. However, the block-based difference reduces the size and number of the changes due to oscillating objects and makes the operation robust even to such natural phenomena.

Fig. 5: First row: indoor sequence, with camouflage phenomena. Second row: outdoor sequence, with moving trees and hedges in the background. Third row: motion detection in a dark, low-contrast scene (left); an indoor sequence, with quite uniform walls and floor (middle); a traffic sequence, containing very small vehicles, well detected and segmented (right).

Finally, the third row of Fig. 5 shows three frames extracted from as many sequences, two of them indoors with moving people and the third showing a busy highway. On the left, the low-contrast scene does not prevent the algorithm from segmenting the people with high accuracy, as one can see by looking at the contour. The middle frame shows how even the details of moving people (e.g. arms, legs and shoes) are segmented accurately, after removing shadows. The right frame probably refers to the most challenging situation, where the depth of the scene makes moving vehicles change their size and become very small when far away.

Our motion detection algorithm has been trained on dozens of sequences, and all the parameters involved have been set up using a Distributed Genetic Algorithm [A1][A2][A3] running on a cluster of 18 PCs. This setup has proved to be general-purpose for all the sequences involved so that, for instance, the threshold parameters for the block- and the pixel-based background difference in our algorithm are fixed. It is worth noting that the algorithm has been adopted by some important commercial video analytics systems.

Removal of moving shadows

OUTLINE

Recognizing shadows in a scene is generally a hard task. A person can reasonably recognize a shadow once the scene geometry and the characterization of light throughout the scene are known. We must transfer this knowledge to the machine so that it can also confidently detect shadows.

The basic question research should answer is:

The main problem encountered in designing a system for outdoor motion detection is the reliability of detecting targets in spite of changes in illumination conditions and shadowing. The detection of shadows as legitimate moving regions may create confusion for the subsequent phases of analysis and tracking. In fact, they can alter the shape of the object and, in general, its properties. Therefore, one of the achievements of the system is to successfully recognize and remove the moving shadows attached to objects. Fig. 1 shows two enlarged samples of shadows: on the left, one can see a dark kernel and well-contrasted borders, while on the right the border is almost undefined and shaded with a low contrast.

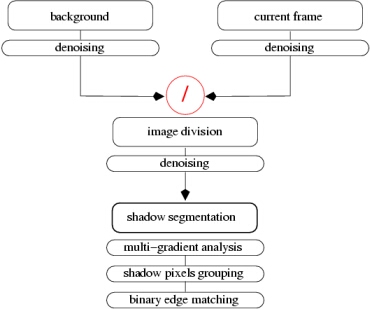

Fig. 1: Enlarged samples of high- (left) and low-contrast (right) shadows.

METHOD

Many shadow detection algorithms take into account a priori information, such as the geometry of the scene or of the moving objects and the location of the light source. We aim to avoid using such knowledge in detecting shadows [S1][S4]. Nevertheless, we exploit the following sources of information:

Fig. 2: General scheme for the shadow detection algorithm.

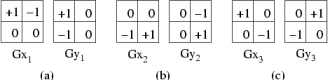

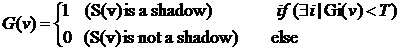

Fig. 3: Background (left), current frame (middle), outcome of division in pseudo-color (right).

Despite a certain redundancy among these gradient operators, the union of these three convolutions finds all the uniform regions of the image S. The binary image G(v) is generated by pruning some of the false shadow pixels of image S, according to the condition of Fig. 4, right. That is, a pixel v∈S belongs to a likely shadow only if there is at least one gradient Gi(v) below a given threshold T. This threshold is not very sensitive, since false shadows are still admitted at this stage.

Fig. 4: Gradient masks (left) and the condition to generate the binary image G(v) (right).

Fig. 5, top-left, shows the binary outcome G of the thresholding operations performed on the gradients of Fig. 3. At this stage, our goal is not to lose true signals. For this purpose, we have performed an OR thresholding, that is, having at least one direction for which the gradient value is bounded has been considered enough. We could have performed an AND operation in order to reduce the number of false positive signals. However, this would also have reduced the true signals, mainly along the shadow borders. The last stage will take care of reducing false signals.
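The difference between the OR and the AND thresholding can be seen in a small sketch. Here S is taken to be the ratio between the current frame and the background, as the caption of Fig. 3 suggests, and the three gradient masks are replaced by simple horizontal, vertical and diagonal differences. Both choices, the threshold value and the function name are assumptions, since the actual masks are only given in Fig. 4.

```python
import numpy as np

def likely_shadow_masks(frame, background, threshold=0.05, eps=1e-6):
    """Return (OR mask, AND mask) of pixels with a small gradient of the ratio image."""
    s = frame.astype(np.float64) / (background.astype(np.float64) + eps)
    # Directional gradient magnitudes; the last row/column keep a zero gradient.
    g_h = np.zeros_like(s)
    g_h[:, :-1] = np.abs(s[:, 1:] - s[:, :-1])
    g_v = np.zeros_like(s)
    g_v[:-1, :] = np.abs(s[1:, :] - s[:-1, :])
    g_d = np.zeros_like(s)
    g_d[:-1, :-1] = np.abs(s[1:, 1:] - s[:-1, :-1])
    below = np.stack([g_h, g_v, g_d]) < threshold
    # OR: at least one direction is uniform. AND: all directions are uniform.
    return below.any(axis=0), below.all(axis=0)

# A uniform shadow (half brightness) covering the three leftmost columns.
background = np.full((6, 6), 100, dtype=np.uint8)
frame = background.copy()
frame[:, 0:3] = 50
or_mask, and_mask = likely_shadow_masks(frame, background)
```

In this toy case the OR mask keeps the pixels on the shadow border (column 2), because the ratio is still uniform along the vertical direction, while the AND mask drops them.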

Fig. 5: The image R after thresholding (top-left), subsequent smart grouping (top-middle), the scheme for false positive reduction (top-right), with related outcome (bottom-left) and the segmented image (bottom-right).

In Fig. 5, top-left, there are many unconnected regions, but the previous thresholding operations have preserved coarse areas. We then use the structural analysis we developed to perform smart grouping, aiming at removing agglomerates of pixels that do not fulfil a given compound structure [S5][S8], while preserving the definition of the original structure of the image. Basically, our structural analysis performs a smart dilation jointly with a connection operation among still-coarse agglomerates of pixels, while keeping a high definition of the existing "good" structures, as shown in Fig. 5, top-right [S7].

Once the likely shadow regions have been connected, it is necessary to find the true shadows. This step is also known as the False Positive Reduction (FPR) step. In fact, we must clean out those regions that in the previous steps had the same appearance as true shadows. These are usually inner parts of blobs, like regions B, C and D of Fig. 5, bottom-left, that exhibit the same photometric properties as true shadows (region A). Therefore, the FPR is essentially based on geometric considerations. All the regions are inside the blobs. Two kinds of regions are discarded: those far from the blob's boundary, and the smallest ones. The basic assumption is that a true shadow must be a large shadow-like region near the boundary. Region removal is accomplished by computing the percentage of the blob's border (red border lines) shared with the boundary of the homogeneous regions just selected (dark blue areas). The threshold percentage p has been set to about 30% of the total perimeter length (red solid and dashed lines).
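The border-sharing test can be sketched as follows, under one possible reading of the rule: the fraction is computed as the number of region boundary pixels that also lie on the blob's border, divided by the total number of region boundary pixels. This interpretation, the 4-neighbour definition of a border and the function names are assumptions made for illustration.

```python
import numpy as np

def border(mask):
    """Pixels of a boolean mask with at least one 4-neighbour outside the mask."""
    padded = np.pad(mask, 1, constant_values=False)
    inner = (padded[:-2, 1:-1] & padded[2:, 1:-1] &
             padded[1:-1, :-2] & padded[1:-1, 2:])
    return mask & ~inner

def shared_border_fraction(blob, region):
    """Fraction of the region's boundary that lies on the blob's border."""
    region_border = border(region)
    shared = region_border & border(blob)
    return shared.sum() / region_border.sum()

def is_shadow(blob, region, p=0.30):
    return shared_border_fraction(blob, region) > p

blob = np.zeros((10, 10), dtype=bool)
blob[1:9, 1:9] = True

region_a = np.zeros_like(blob)
region_a[1:9, 1:4] = True   # large strip along the blob's left border
region_b = np.zeros_like(blob)
region_b[1:5, 5:7] = True   # touches the border only along a short segment
region_d = np.zeros_like(blob)
region_d[4:6, 4:6] = True   # fully inside the blob

fraction_b = shared_border_fraction(blob, region_b)
```

With these toy masks, only `region_a` exceeds the 30% threshold; `region_b` shares a quarter of its boundary with the blob's border and `region_d` shares none.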
Practically speaking, the homogeneous regions whose boundary is shared with the blob's border for more than 30% of its length are considered to be shadows. Looking at the example of Fig. 5, bottom-left, region D is discarded because it is too far from the border. Regions B and C are excluded because the segment of border shared with the blob, near the dashed red line, is far less than p=30%. Only region A passes this thresholding phase. Shadows are then removed by considering the outer shadow border (red solid line of Fig. 5, bottom-left) and following the inner shadow border joining the two cross signs. The outcome of the FPR stage in the example we have used is shown in Fig. 5, bottom-right.

RESULTS

Our method requires that blobs be segmented accurately, which our motion detection ensures. Nevertheless, when there are no shadows or the segmentation is poor, the method does not alter the results, because in those cases the histogram is quite sparse and no action is taken.

All the parameters have been set up using a training sequence and a genetic algorithm [A1] and then tested on ten different sequences, both indoor and outdoor, the latter being the most challenging. In fact, the changing lighting conditions make shadows heavily change their appearance during the day.

Fig. 6: First row, outdoor scene: the binary outcome of the motion detection before shadow removal (left) and after segmentation, with labelled blobs (middle), and the final outcome after shadow removal (right). Second row, indoor scene: the binary (left) and the grey-level (middle) outcome of the motion detection, still containing shadows, and the final outcome after shadow removal (right).

Our shadow detection and removal algorithm is an important stage that improves the effectiveness of our motion analysis. In fact, although the shadows we consider are really moving objects, they often do not represent the object of interest. Besides, the features of shadows have low uniqueness and do not allow different objects to be distinguished, whether they are vehicles or people.

Thanks also to the high definition of the blobs coming out of our motion detection, we manage to remove most of the moving shadows attached to the objects, thus obtaining the object of interest only. In the end, this also helps the tracking and motion analysis stage, because shadows may unpredictably alter the shape and, in general, all the features of the blob being tracked, thus misleading the tracker, which could extract wrong information or even lose the object.

People/Object tracking and event detection

OUTLINE

The most relevant information in a video sequence arises from the analysis of moving items, whether they are people, vehicles or, generally, objects. Human beings can easily track a given object with their eyes (even in the presence of hundreds of moving objects), almost independently of its changes in speed, shape or appearance. That happens because the human brain can extract temporal "invariants" from the moving object and follow them across time.

Using a stereo camera or multiple cameras makes it possible to extract 3D information and bound this phenomenon. Nevertheless, the reduced field of view needed with present technology to attain a proper accuracy in the z-dimension limits the use of 3D tracking mainly to indoor environments or machine vision applications.

Fig. 1: Left: moving vehicles are detected as one blob by motion detection algorithms. Right: tracking algorithms aim to split one blob into its component objects (in the image, highlighted by the oriented bounding boxes and the ID numbers).

2D cameras still represent the reference technology in most real-world cases. However, when using a classical camera the spatial position of each object is lost and what we see is just a projection of the scene on the sensor plane. Consequently, different objects occluding each other are detected as one moving object (Fig. 1, left), making the overall problem even more challenging. Therefore, after moving items have been detected frame by frame (this is usually performed by comparing each current frame with a reference static scene), the hardest task is to find for each object the best features to track. As a matter of fact, multi-dimensional feature vectors must be considered to reduce the degrees of freedom, and the uncertainty accordingly, with the purpose of finding the different objects composing the blob.

METHOD

The underlying technology we have developed is robust enough to work not just with rigid bodies (e.g. vehicles) but even with non-rigid ones (people are the most significant example). The algorithms developed manage to keep track of a person moving in a crowded situation or of vehicles crossing urban intersections by exploiting an ensemble of innovative strategies. Unsupervised artificial neural networks (Self-Organizing Maps, SOMs), one for each object, are used to cluster the features correctly tracked, thus helping to remove tracking errors (Fig. 2). We have also extended the well-known concept of the Gray Level Co-occurrence Matrix (commonly used in image-based texture analysis) and devised the Spatio-Temporal Co-occurrence Matrix (STCM) to study the time correlation between pairs of pixels at different distances, in order to assess the rigidity of the body being tracked [S3]. This is used jointly with the SOM to prune tracking errors.

Fig. 2: From left to right: tracking without and with SOM; object segmentation using just local or global information through a region growing approach; tracking of vehicles occluded by static objects: they do not split and remain one object.

At a higher level, the real-time tracking system we have conceived relies on local and global information, namely corner points and whole-object features (Fig. 2). An object's corner points are visible even in case of partial occlusion, although they may partly lose their significance. On the other hand, global blob information becomes useful, for instance, to improve object identification during dynamic occlusion, or is even necessary to guess object properties after a split or a miss due to inaccuracies of motion detection or to static occlusions. In practice, as elements of the feature vector we consider geometrical (e.g. perimeter), photometrical (e.g. the "corner" points) and statistical (e.g. the features' temporal distribution) features.

Finally, the outcome of the tracker consists of the temporal distributions of all the features taken into consideration and of the trajectories reconstructed from sequences of objects' centroids. The high accuracy of our tracking algorithm yields precise trajectories that characterize objects for all the time they stay in the scene. Accordingly, their analysis allowed us to develop effective algorithms to detect interesting object events that are useful to infer their behaviour. In fact, event detection arises not just from spatial analysis but also from temporal analysis. The most important basic event is the detection of stopped objects, since this implies the capability to detect permanence circumscribed from both the spatial and the temporal points of view [S9]. These events and analyses could refer to objects in general, but people and vehicles represent a particular case with high practical impact.

RESULTS

Using our tracking algorithm, it is possible either to infer consumers' behaviour or to monitor drivers' behaviour in order to detect, or even prevent, possibly dangerous actions.

Fig. 3, first row, shows a couple of frames of a busy urban intersection (left and middle), with small motorbikes and vehicles moving along different directions, as can be seen in Fig. 3, right, where light red represents the most used trajectories. The middle image shows vehicles on the left that are tracked while stopped at the traffic light. Also, virtual loops on the roadway keep track of the number of vehicles crossing them in both directions. As one can see, each blob corresponds to one vehicle and the colour mask adheres very well to the real object shape.
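Counting crossings in both directions can be illustrated with a simplified stand-in for a virtual loop: a single virtual line segment tested against consecutive centroids of a trajectory. The function names and the convention that "forward" means ending on the positive side of the line are assumptions for this sketch.

```python
def side(p, a, b):
    """Sign of the cross product (b - a) x (p - a): the side of line a->b where p lies."""
    cross = (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    return (cross > 0) - (cross < 0)

def segments_intersect(p, q, a, b):
    """True if segment p-q properly crosses segment a-b."""
    return (side(p, a, b) * side(q, a, b) < 0 and
            side(a, p, q) * side(b, p, q) < 0)

def count_crossings(trajectory, a, b):
    """Count crossings of the virtual line a-b as (forward, backward)."""
    forward = backward = 0
    for p, q in zip(trajectory, trajectory[1:]):
        if segments_intersect(p, q, a, b):
            if side(q, a, b) > 0:
                forward += 1
            else:
                backward += 1
    return forward, backward

line_a, line_b = (0, 5), (10, 5)          # horizontal virtual line at y = 5
going_down = [(2, 2), (2, 4), (2, 6), (2, 8)]
going_up = going_down[::-1]
passing_beside = [(12, 2), (12, 8)]       # crosses y = 5 outside the segment

counts_down = count_crossings(going_down, line_a, line_b)
counts_up = count_crossings(going_up, line_a, line_b)
counts_beside = count_crossings(passing_beside, line_a, line_b)
```

Testing the segment rather than the infinite line means that objects passing beside the virtual line are not counted.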

Fig. 3: First row: a couple of frames from a busy urban intersection (left and middle); the map of trajectories collected over six hours (right), with the most used in light red. Second row: a couple of frames from a narrow indoor passage (left), with the most walked paths (middle, in light red); a crowded situation where one blob is split into 13 people, tracked correctly (right).

Fig. 4 shows some further analyses related to people tracking only. The first row shows the possibility of counting people standing or moving in a scene (left), people crossing virtual lines (middle), and stopped people (right; in the example, ID 238 is still). In the second row one can see the heat map (left), showing graphically the busiest zones (expressed as the number of people who walked through them), and the dwell map (right), showing where people mostly stop (expressed in time). Of course, detailed tabular data are also available for each tracked person.

Fig. 4: First row: seven moving people segmented and counted (left), three people crossing a virtual line (middle), four people in the scene, one of them still (right). Second row: on the left, the heat map with the most walked zones (in red); on the right, the stopped map showing the average stopped time.

In addition, our trajectory analysis makes it possible to detect anomalous trajectories, where "anomalous" is of course an application-oriented definition. Fig. 5 shows some events and illegal behaviours detected in a video surveillance and in an automatic traffic monitoring application. First row, from left to right: normal situation when people go upstairs (green arrow), detection of an anomalous event when they go downstairs (red arrow) or when people run (red arrow). Second row, from left to right: a stopped vehicle in the emergency lane, loss of a lorry's load, a U-turn. In the third row, from left to right, one can see a car changing lane (from 5 to 4) at the tollbooth, queue detection (here the number of vehicles needed to trigger the event had been set to five, in lane 3), and finally a lorry overtaking. In this last scene, the vehicles had also been classified into 3+1 classes (motorbikes, cars, lorries and X) by means of the multi-class hierarchical SVM (Support Vector Machine) algorithm we developed.
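A stopped-object event, such as the stopped vehicle mentioned above, can be sketched as a permanence test on the centroid trajectory: the object must stay within a small radius for a minimum number of frames. The radius, the minimum duration and the function name are assumptions; this is only a simplified illustration, not the detection method cited as [S9].

```python
import math

def find_stops(centroids, radius=5.0, min_frames=25):
    """Return (start, end) frame index pairs of intervals in which the object
    stays within `radius` of the position it had at the start of the interval,
    for at least `min_frames` frames."""
    stops = []
    start = 0
    for i in range(1, len(centroids) + 1):
        # The interval ends when the track ends or the object leaves the radius.
        moved = (i == len(centroids) or
                 math.dist(centroids[i], centroids[start]) > radius)
        if moved:
            if i - start >= min_frames:
                stops.append((start, i - 1))
            start = i
    return stops

# 10 frames moving, 30 frames still at x = 100, then 5 frames moving again.
track = ([(10 * i, 0) for i in range(10)] +
         [(100, 0)] * 30 +
         [(100 + 10 * i, 0) for i in range(1, 6)])
stops = find_stops(track)
```

The two parameters mirror the two constraints of a stop: `radius` bounds the permanence in space and `min_frames` bounds it in time.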

Fig. 5: Some frames referring to as many detected events. First row, from left to right: normal situation when people go upstairs, alarm when they go downstairs, alarm when a running person is detected. Second row, from left to right: stopped vehicle, load loss from a lorry, U-turn. Third row, from left to right: lane change at a tollbooth, queue detection, lorry overtaking.

INDUSTRIAL APPLICATION


Copyright © 2008, A.G. - All Rights Reserved
